Google’s Pause on Gemini AI’s Image Generation

Unveiling Google’s Gemini AI: A Temporary Pause

In a move that reflects the ever-evolving landscape of artificial intelligence, Google has temporarily halted a distinctive feature of its Gemini AI: the ability to generate images of people. The decision followed a wave of criticism about the images Gemini produced, particularly their inaccuracies in historical contexts.

Criticisms and Controversies: Historical Accuracy Under Scrutiny

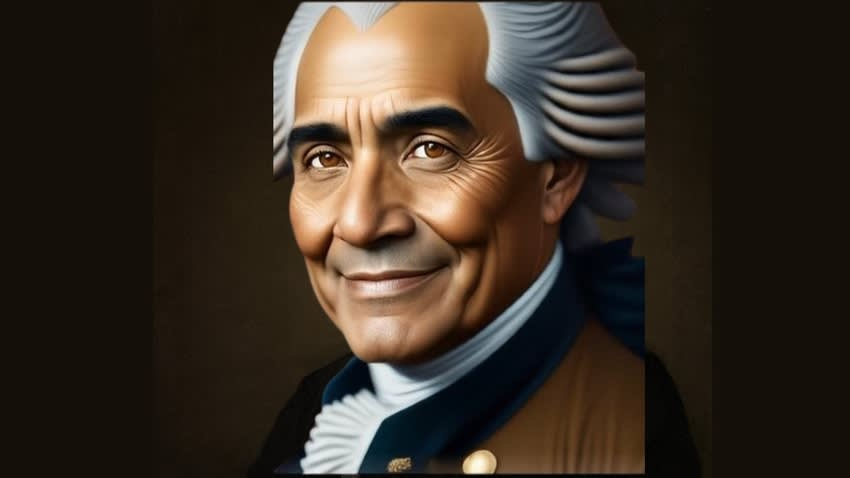

Gemini’s image generation faced a barrage of critiques. Detractors highlighted inaccuracies and potential biases in the images it produced. Particularly notable were cases where historical accuracy was compromised, such as people of color placed in scenes where their presence contradicted the historical record. This sparked a discussion about how biases in an AI’s training data, and in the measures meant to correct them, can perpetuate or distort representation.

The controversy escalated with concrete examples, notably racially diverse images of historically white-dominated settings. This led to accusations that Google had over-corrected for racial bias, with Gemini producing diverse depictions even of Nazi-era German soldiers. Such historical inaccuracies triggered a substantial backlash on social media and in specific communities, underscoring the need to balance diversity with historical accuracy in AI-generated content.

Google’s Acknowledgment and Commitment

In response to the mounting criticism, Google acknowledged the problem. The company said that generating a diverse range of people is generally a good thing, but that in certain historical depictions Gemini “missed the mark.” This acknowledgment paved the way for Google’s commitment to improving Gemini’s image generation.

Google emphasized the importance of accurate and sensitive portrayals across races, genders, and historical contexts. The company is refining the models and rules that govern Gemini, aiming to reduce skewed outputs and historical inaccuracies. This commitment reflects a broader challenge in the AI field: mitigating bias and building ethical considerations into AI models.

The Broader Discourse: AI, Bias, and Ethical Considerations

Google’s decision to pause and review Gemini’s people-generation feature ignited a wider debate. That debate extends beyond this specific case to the pervasive challenge of mitigating bias in AI models and the ethical questions surrounding AI-generated content. It serves as a reminder of the ongoing effort by technology companies to balance innovation, representation, and social responsibility in a complex AI landscape.
